Results (Anticipated)

This reciprocal mentorship program was designed to generate impact at two levels: for individual participants and for the organization as a whole.

For Participants

The program was expected to offer participants more than support from a single mentor. By creating space for trust and belonging, it would let participants take risks in a safe environment, build confidence, and strengthen their leadership capacity. Participants were also expected to expand their networks across generations, gaining insight into both new and traditional ways of working: younger employees would gain access to institutional knowledge, while more experienced staff would learn fresh approaches and tools. Over time, participants could also expect improved communication skills, a stronger sense of agency in their roles, and greater visibility within the organization.

For the Organization

At the organizational level, expected results went beyond individual development. The program was designed to generate new knowledge assets and measurable skill growth while building a stronger leadership pipeline for the future. Over time, it could contribute to cultural change by embedding mentorship into everyday practice, to higher retention through a stronger sense of belonging, and to greater cross-generational collaboration that breaks down silos. The program was also expected to enhance employee engagement, support succession planning, and give leaders early insight into emerging talent who might otherwise remain under the radar.

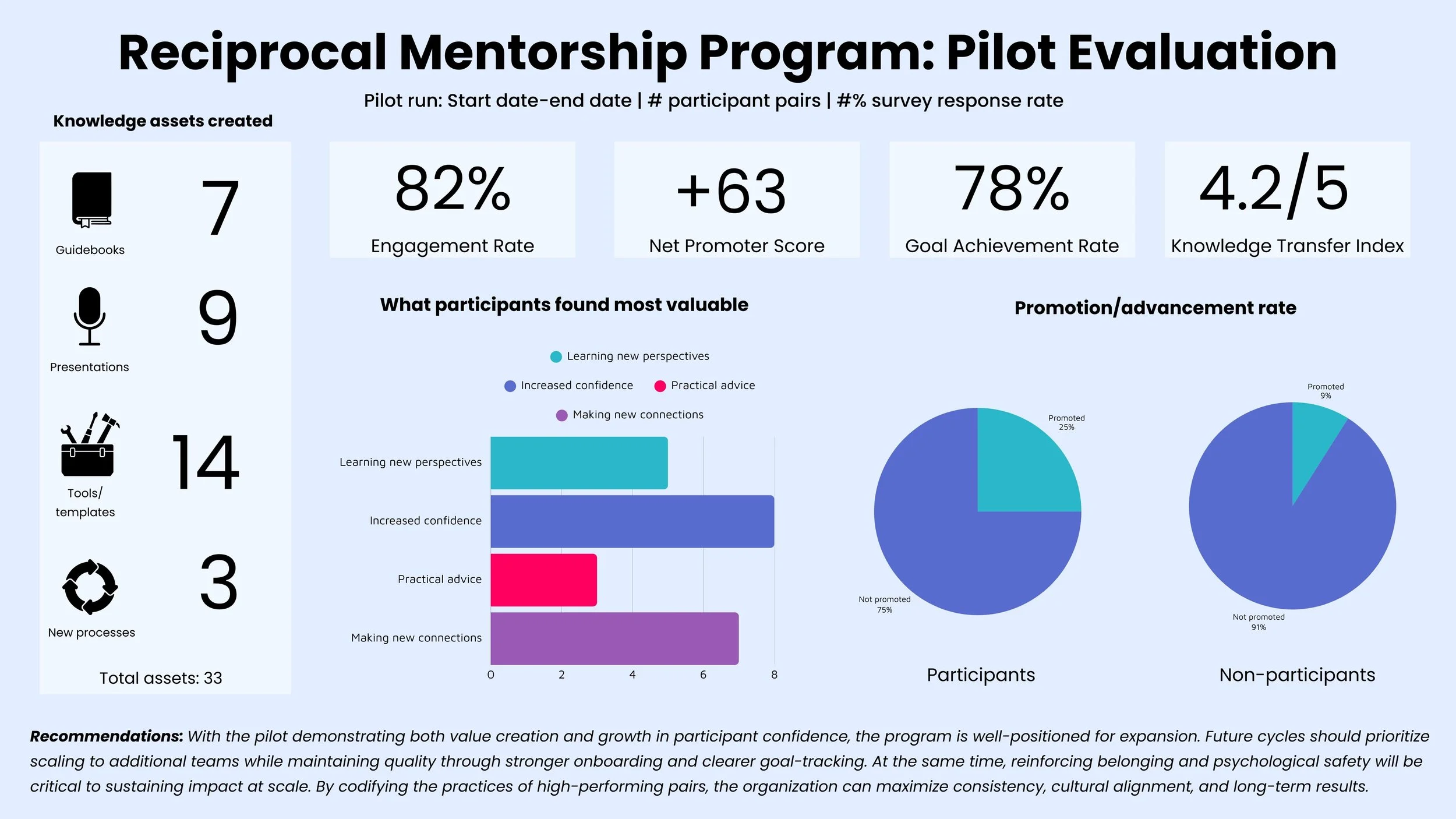

To illustrate how these results could be monitored and shared, I created a sample dashboard with mock data. The dashboard shows how survey responses, reflection inputs, and mentorship artifacts could be translated into clear, actionable insights for leaders. This dashboard is also included in the Evaluation Toolkit as an example of how raw data can be turned into a visual story, making patterns easier to spot and insights easier to act on.

Evaluation Framework

To evaluate the program’s impact, I created a framework that measured both the quality of mentoring relationships and the organizational outcomes they produced.

Belonging and psychological safety are prerequisites for effective learning and for the kind of vulnerability this program requires. Reciprocal mentorship depends on participants being willing to share openly, take risks, and learn from one another. Without those conditions in place, the program is unlikely to generate outcomes such as knowledge transfer, leadership development, or stronger relationships across generations.

The evaluation framework therefore examined two dimensions:

Relationship quality → assessed through belonging, trust, and psychological safety—indicators of whether participants felt supported and able to learn together.

Organizational outcomes → assessed through measures such as skill development (via pre-/post-assessments), confidence gains, and knowledge assets created.

By comparing these two dimensions, the evaluation surfaces not only how participants experienced their mentorship but also how those experiences translated into results the organization cares about.

Four-Quadrant Analysis

I adapted Brinkerhoff’s Success Case Method into a four-quadrant model that compares relationship quality with results achieved. The model helps explain why some mentorships thrive, why others struggle, and what the organization can learn from both.

In the interactive graphic below, each quadrant can be clicked to show what that type of partnership is likely to reveal, along with sample questions and action insights.
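As a rough illustration of the sorting step behind the four-quadrant model, the sketch below places each mentorship pair into a quadrant by comparing a relationship-quality score with an outcomes score. It is a minimal sketch under stated assumptions, not the toolkit's actual method: the 1-5 survey scale, the 3.0 cutoff, the `Pair` fields, and the quadrant labels are all hypothetical. In a Success Case Method study, pairs in the most and least successful quadrants would then be selected for follow-up interviews.

```python
from dataclasses import dataclass

# Hypothetical cutoff: scores are assumed to be averages on a 1-5 survey scale,
# with the scale midpoint (3.0) separating "high" from "low".
CUTOFF = 3.0


@dataclass
class Pair:
    name: str
    relationship: float  # assumed mean of belonging, trust, and psychological-safety items
    outcomes: float      # assumed mean of skill-gain, confidence, and knowledge-asset measures


def quadrant(pair: Pair, cutoff: float = CUTOFF) -> str:
    """Return a hypothetical quadrant label for one mentorship pair."""
    strong_relationship = pair.relationship >= cutoff
    strong_outcomes = pair.outcomes >= cutoff
    if strong_relationship and strong_outcomes:
        return "Thriving"
    if strong_relationship:
        return "Connected, low results"
    if strong_outcomes:
        return "Results without connection"
    return "Struggling"


def group_by_quadrant(pairs: list[Pair]) -> dict[str, list[str]]:
    """Group pair names by quadrant so extreme cases can be picked for interviews."""
    groups: dict[str, list[str]] = {}
    for pair in pairs:
        groups.setdefault(quadrant(pair), []).append(pair.name)
    return groups


if __name__ == "__main__":
    # Mock scores only, in the spirit of the sample dashboard's mock data.
    mock_pairs = [
        Pair("A", 4.5, 4.2),
        Pair("B", 4.1, 2.3),
        Pair("C", 2.0, 3.8),
        Pair("D", 1.9, 2.1),
    ]
    print(group_by_quadrant(mock_pairs))
```

A single fixed cutoff keeps the sketch easy to follow; a program with real data might instead split at the cohort median or flag pairs near the boundary for closer review.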

Data Evaluation Toolkit

To support organizations that may want to apply this approach, I developed an Evaluation Toolkit. It combines straightforward data collection methods with easy-to-use analysis frameworks so programs can adapt evaluation to their own context and capacity.

The toolkit includes:

Data collection options → surveys, pulse checks, reflection prompts, interviews, and artifact submissions.

Analysis frameworks → guides for applying models such as Brinkerhoff’s Success Case Method, Guskey’s 5 Levels, RE-AIM, and Bennett’s Hierarchy.

Reporting and storytelling tools → templates for dashboards, KPI summaries, and participant stories that make results easy to share with leaders.

Scaling and sustainability guidance → strategies for embedding evaluation into ongoing practice.

The full toolkit, including templates, models, and examples, can be explored here.

Designer Reflection

Designing a program and evaluation framework without a specific organization or audience in mind was both a challenge and an opportunity. Without knowing the culture, roles, or existing systems of a workplace, I had to anticipate a wide range of possibilities. That required me to build more scaffolds and tools than any one program would ever use, so that organizations could adapt what they needed while leaving the rest aside.

The same applied to participants. Not knowing the target audience meant I needed to design with autonomy and flexibility at the core—principles that are central to adult learning. By creating options, I aimed to support different learning styles, levels of experience, and professional goals, while still providing enough structure for pairs to succeed. I also included tools for problem-solving and training resources to set participants up for success when challenges inevitably arose.

I also anchored the design in broader workforce trends and research. With baby boomers retiring in large numbers, many organizations face gaps in knowledge transfer and succession planning. Reciprocal mentorship offers a way to address both issues while also building belonging across generations. I grounded the program in research on organizational development, psychology, belonging, and mentorship models so it would serve as an evidence-based response to the challenges organizations face today.